After almost a year of operation, my VHF station started misbehaving over the weekend with corrupted waterfalls, so I took the opportunity to move to a new SD card and a fresh install of the SatNOGS client.

It was a very smooth transition, and I was up and running in about half an hour.

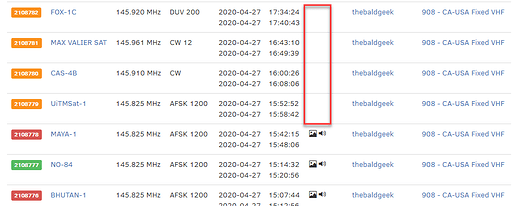

However, after three successful observations, the station stopped showing the waterfall.

I SSHed into the Pi and found the following:

> pi@satnogs-vhf:/tmp/.satnogs/data $ systemctl status satnogs-client

> ● satnogs-client.service - SatNOGS client

> Loaded: loaded (/etc/systemd/system/satnogs-client.service; enabled; vendor preset: enabled)

> Active: active (running) since Mon 2020-04-27 07:48:57 PDT; 3h 36min ago

> Main PID: 308 (satnogs-client)

> Tasks: 73 (limit: 2200)

> Memory: 641.9M

> CGroup: /system.slice/satnogs-client.service

> +- 308 /var/lib/satnogs/bin/python3 /var/lib/satnogs/bin/satnogs-client

> +- 746 /usr/bin/python3 /usr/bin/satnogs_fsk_ax25.py --soapy-rx-device=driver=rtlsdr --samp-rate-rx=2.048e6 --rx-freq=145825000 --file-path=/tmp/.satnogs/data/receiving_satnogs_2108779_2020-04-

> +- 792 /usr/bin/python3 /usr/bin/satnogs_cw_decoder.py --soapy-rx-device=driver=rtlsdr --samp-rate-rx=2.048e6 --rx-freq=145910000 --file-path=/tmp/.satnogs/data/receiving_satnogs_2108780_2020-0

> +- 850 /usr/bin/python3 /usr/bin/satnogs_cw_decoder.py --soapy-rx-device=driver=rtlsdr --samp-rate-rx=2.048e6 --rx-freq=145961200 --file-path=/tmp/.satnogs/data/receiving_satnogs_2108781_2020-0

> +- 913 /usr/bin/python3 /usr/bin/satnogs_amsat_fox_duv_decoder.py --soapy-rx-device=driver=rtlsdr --samp-rate-rx=2.048e6 --rx-freq=145920000 --file-path=/tmp/.satnogs/data/receiving_satnogs_210

> +- 949 /usr/bin/python3 /usr/bin/satnogs_cw_decoder.py --soapy-rx-device=driver=rtlsdr --samp-rate-rx=2.048e6 --rx-freq=145910000 --file-path=/tmp/.satnogs/data/receiving_satnogs_2108783_2020-0

> +- 988 /usr/bin/python3 /usr/bin/satnogs_cw_decoder.py --soapy-rx-device=driver=rtlsdr --samp-rate-rx=2.048e6 --rx-freq=145855000 --file-path=/tmp/.satnogs/data/receiving_satnogs_2108784_2020-0

> +-1023 /usr/bin/python3 /usr/bin/satnogs_amsat_fox_duv_decoder.py --soapy-rx-device=driver=rtlsdr --samp-rate-rx=2.048e6 --rx-freq=145880000 --file-path=/tmp/.satnogs/data/receiving_satnogs_210

>

> Apr 27 10:34:27 satnogs-vhf satnogs-client[308]: [INFO] Using format CF32.

> Apr 27 10:41:16 satnogs-vhf satnogs-client[308]: usb_claim_interface error -6

> Apr 27 10:41:16 satnogs-vhf satnogs-client[308]: usb_claim_interface error -6

> Apr 27 10:41:16 satnogs-vhf satnogs-client[308]: [INFO] Using format CF32.

> Apr 27 11:00:54 satnogs-vhf satnogs-client[308]: usb_claim_interface error -6

> Apr 27 11:00:54 satnogs-vhf satnogs-client[308]: usb_claim_interface error -6

> Apr 27 11:00:54 satnogs-vhf satnogs-client[308]: [INFO] Using format CF32.

> Apr 27 11:09:42 satnogs-vhf satnogs-client[308]: usb_claim_interface error -6

> Apr 27 11:09:42 satnogs-vhf satnogs-client[308]: usb_claim_interface error -6

> Apr 27 11:09:42 satnogs-vhf satnogs-client[308]: [INFO] Using format CF32.
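In libusb, error -6 is `LIBUSB_ERROR_BUSY`, which suggests that some other process already had the RTL-SDR claimed when the new observation tried to open it. The CGroup list above shows several decoder flowgraphs (observation 2108779 onward) still alive at the same time, all opened on `driver=rtlsdr`. Below is a small sketch for pulling those leftover processes out of pasted `systemctl status` text. The regular expressions are assumptions based only on the lines shown here, and an observation ID that was cut off in the output is reported as `None` rather than guessed.

```python
import re
from typing import List, NamedTuple, Optional


class Flowgraph(NamedTuple):
    pid: int
    script: str
    rx_freq_hz: Optional[int]
    observation_id: Optional[int]  # None when the file path was truncated in the output


# Assumed shape of a CGroup child line (the tree prefix may be "+-", "|-", "├─", ...):
#   +- 746 /usr/bin/python3 /usr/bin/satnogs_fsk_ax25.py ... --rx-freq=145825000 --file-path=...
PROC_RE = re.compile(r"(\d+)\s+/\S*python3\s+\S*/(satnogs_\w+\.py)(.*)")
FREQ_RE = re.compile(r"--rx-freq=(\d+)")
# Require the underscore after the ID so a cut-off "receiving_satnogs_210" is not misread.
OBS_RE = re.compile(r"receiving_satnogs_(\d+)_")


def find_flowgraphs(status_text: str) -> List[Flowgraph]:
    """Return every satnogs_*.py flowgraph process listed in `systemctl status` output."""
    found = []
    for line in status_text.splitlines():
        m = PROC_RE.search(line)
        if not m:
            continue  # not a flowgraph line (header, journal entry, the client itself, ...)
        args = m.group(3)
        freq = FREQ_RE.search(args)
        obs = OBS_RE.search(args)
        found.append(
            Flowgraph(
                pid=int(m.group(1)),
                script=m.group(2),
                rx_freq_hz=int(freq.group(1)) if freq else None,
                observation_id=int(obs.group(1)) if obs else None,
            )
        )
    return found


# Three lines copied from the output above (the client plus two flowgraphs).
SAMPLE = """\
> +- 308 /var/lib/satnogs/bin/python3 /var/lib/satnogs/bin/satnogs-client
> +- 746 /usr/bin/python3 /usr/bin/satnogs_fsk_ax25.py --soapy-rx-device=driver=rtlsdr --samp-rate-rx=2.048e6 --rx-freq=145825000 --file-path=/tmp/.satnogs/data/receiving_satnogs_2108779_2020-04-
> +-1023 /usr/bin/python3 /usr/bin/satnogs_amsat_fox_duv_decoder.py --soapy-rx-device=driver=rtlsdr --samp-rate-rx=2.048e6 --rx-freq=145880000 --file-path=/tmp/.satnogs/data/receiving_satnogs_210
"""

for fg in find_flowgraphs(SAMPLE):
    print(fg)
# Flowgraph(pid=746, script='satnogs_fsk_ax25.py', rx_freq_hz=145825000, observation_id=2108779)
# Flowgraph(pid=1023, script='satnogs_amsat_fox_duv_decoder.py', rx_freq_hz=145880000, observation_id=None)
```

More than one entry in the result means more than one flowgraph is running against a single SDR, which is consistent with the repeated busy errors in the journal.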

This sounds a lot like the last few posts on this issue.

Is there anything I can test or check before I reboot? I would hate to lose any troubleshooting data by rebooting.
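One way to keep the troubleshooting data is to snapshot the live state to a file before rebooting. This is a minimal sketch, assuming Python 3.7+ on the Pi. The command list and output location are illustrative choices, not something the SatNOGS client provides. `systemctl status --full` keeps the long flowgraph command lines from being truncated the way they are above, and `journalctl -b` limits the journal to the current boot.

```python
import datetime
import subprocess
from pathlib import Path
from typing import List, Sequence

# Illustrative set of standard Debian/Raspbian commands worth capturing before a reboot.
DIAGNOSTIC_COMMANDS: List[List[str]] = [
    ["systemctl", "status", "satnogs-client", "--no-pager", "--full"],
    ["journalctl", "-u", "satnogs-client", "--no-pager", "-b"],
    ["ps", "-ef"],
    ["lsusb"],
]


def run_capture(cmd: Sequence[str], timeout: float = 30.0) -> str:
    """Run one command and return its output as a labelled section; never raises."""
    header = "$ " + " ".join(cmd)
    try:
        proc = subprocess.run(list(cmd), capture_output=True, text=True, timeout=timeout)
        body = proc.stdout + proc.stderr  # keep stderr too; exit codes are not checked
    except subprocess.TimeoutExpired:
        body = f"(timed out after {timeout}s)\n"
    except OSError as exc:  # e.g. the command is not installed
        body = f"(could not run: {exc})\n"
    return f"{header}\n{body}\n"


def snapshot(commands: Sequence[Sequence[str]], out_dir: Path) -> Path:
    """Write the output of every command to one timestamped file and return its path."""
    out_dir.mkdir(parents=True, exist_ok=True)
    stamp = datetime.datetime.now().strftime("%Y%m%d-%H%M%S")
    out_file = out_dir / f"satnogs-diag-{stamp}.txt"
    out_file.write_text("".join(run_capture(c) for c in commands))
    return out_file
```

From a Python shell on the Pi, something like `snapshot(DIAGNOSTIC_COMMANDS, Path.home() / "satnogs-diag")` would write the file under the home directory rather than `/tmp`, which may be cleared at boot.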

Thanks.