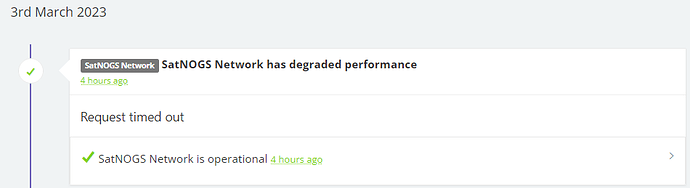

I am wondering why I sometimes get the errors shown below for an indefinite period. Once they start, they repeat every minute.

Is something configured incorrectly? I am running 1.8.1 and observations are successful. I am just curious about all these errors; could someone give me more information about them?

```
Mar 03 12:52:59 raspberrypi satnogs-client[427]: satnogsclient.scheduler.tasks - ERROR - An error occurred trying to GET observation jobs from network
Mar 03 12:52:59 raspberrypi satnogs-client[427]: Traceback (most recent call last):
Mar 03 12:52:59 raspberrypi satnogs-client[427]: File "/var/lib/satnogs/lib/python3.9/site-packages/urllib3/connectionpool.py", line 449, in _make_request
Mar 03 12:52:59 raspberrypi satnogs-client[427]: six.raise_from(e, None)
Mar 03 12:52:59 raspberrypi satnogs-client[427]: File "<string>", line 3, in raise_from
Mar 03 12:52:59 raspberrypi satnogs-client[427]: File "/var/lib/satnogs/lib/python3.9/site-packages/urllib3/connectionpool.py", line 444, in _make_request
Mar 03 12:52:59 raspberrypi satnogs-client[427]: httplib_response = conn.getresponse()
Mar 03 12:52:59 raspberrypi satnogs-client[427]: File "/usr/lib/python3.9/http/client.py", line 1347, in getresponse
Mar 03 12:52:59 raspberrypi satnogs-client[427]: response.begin()
Mar 03 12:52:59 raspberrypi satnogs-client[427]: File "/usr/lib/python3.9/http/client.py", line 307, in begin
Mar 03 12:52:59 raspberrypi satnogs-client[427]: version, status, reason = self._read_status()
Mar 03 12:52:59 raspberrypi satnogs-client[427]: File "/usr/lib/python3.9/http/client.py", line 268, in _read_status
Mar 03 12:52:59 raspberrypi satnogs-client[427]: line = str(self.fp.readline(_MAXLINE + 1), "iso-8859-1")
Mar 03 12:52:59 raspberrypi satnogs-client[427]: File "/usr/lib/python3.9/socket.py", line 704, in readinto
Mar 03 12:52:59 raspberrypi satnogs-client[427]: return self._sock.recv_into(b)
Mar 03 12:52:59 raspberrypi satnogs-client[427]: File "/usr/lib/python3.9/ssl.py", line 1241, in recv_into
Mar 03 12:52:59 raspberrypi satnogs-client[427]: return self.read(nbytes, buffer)
Mar 03 12:52:59 raspberrypi satnogs-client[427]: File "/usr/lib/python3.9/ssl.py", line 1099, in read
Mar 03 12:52:59 raspberrypi satnogs-client[427]: return self._sslobj.read(len, buffer)
Mar 03 12:52:59 raspberrypi satnogs-client[427]: socket.timeout: The read operation timed out
Mar 03 12:52:59 raspberrypi satnogs-client[427]: During handling of the above exception, another exception occurred:
Mar 03 12:52:59 raspberrypi satnogs-client[427]: Traceback (most recent call last):
Mar 03 12:52:59 raspberrypi satnogs-client[427]: File "/var/lib/satnogs/lib/python3.9/site-packages/requests/adapters.py", line 489, in send
Mar 03 12:52:59 raspberrypi satnogs-client[427]: resp = conn.urlopen(
Mar 03 12:52:59 raspberrypi satnogs-client[427]: File "/var/lib/satnogs/lib/python3.9/site-packages/urllib3/connectionpool.py", line 787, in urlopen
Mar 03 12:52:59 raspberrypi satnogs-client[427]: retries = retries.increment(
Mar 03 12:52:59 raspberrypi satnogs-client[427]: File "/var/lib/satnogs/lib/python3.9/site-packages/urllib3/util/retry.py", line 550, in increment
Mar 03 12:52:59 raspberrypi satnogs-client[427]: raise six.reraise(type(error), error, _stacktrace)
Mar 03 12:52:59 raspberrypi satnogs-client[427]: File "/var/lib/satnogs/lib/python3.9/site-packages/urllib3/packages/six.py", line 770, in reraise
Mar 03 12:52:59 raspberrypi satnogs-client[427]: raise value
Mar 03 12:52:59 raspberrypi satnogs-client[427]: File "/var/lib/satnogs/lib/python3.9/site-packages/urllib3/connectionpool.py", line 703, in urlopen
Mar 03 12:52:59 raspberrypi satnogs-client[427]: httplib_response = self._make_request(
Mar 03 12:52:59 raspberrypi satnogs-client[427]: File "/var/lib/satnogs/lib/python3.9/site-packages/urllib3/connectionpool.py", line 451, in _make_request
Mar 03 12:52:59 raspberrypi satnogs-client[427]: self._raise_timeout(err=e, url=url, timeout_value=read_timeout)
Mar 03 12:52:59 raspberrypi satnogs-client[427]: File "/var/lib/satnogs/lib/python3.9/site-packages/urllib3/connectionpool.py", line 340, in _raise_timeout
Mar 03 12:52:59 raspberrypi satnogs-client[427]: raise ReadTimeoutError(
Mar 03 12:52:59 raspberrypi satnogs-client[427]: urllib3.exceptions.ReadTimeoutError: HTTPSConnectionPool(host='network.satnogs.org', port=443): Read timed out. (read>
Mar 03 12:52:59 raspberrypi satnogs-client[427]: During handling of the above exception, another exception occurred:
Mar 03 12:52:59 raspberrypi satnogs-client[427]: Traceback (most recent call last):
Mar 03 12:52:59 raspberrypi satnogs-client[427]: File "/var/lib/satnogs/lib/python3.9/site-packages/satnogsclient/scheduler/tasks.py", line 191, in get_jobs
Mar 03 12:52:59 raspberrypi satnogs-client[427]: response = requests.get(url,
Mar 03 12:52:59 raspberrypi satnogs-client[427]: File "/var/lib/satnogs/lib/python3.9/site-packages/requests/api.py", line 73, in get
Mar 03 12:52:59 raspberrypi satnogs-client[427]: return request("get", url, params=params, **kwargs)
Mar 03 12:52:59 raspberrypi satnogs-client[427]: File "/var/lib/satnogs/lib/python3.9/site-packages/requests/api.py", line 59, in request
Mar 03 12:52:59 raspberrypi satnogs-client[427]: return session.request(method=method, url=url, **kwargs)
Mar 03 12:52:59 raspberrypi satnogs-client[427]: File "/var/lib/satnogs/lib/python3.9/site-packages/requests/sessions.py", line 587, in request
Mar 03 12:52:59 raspberrypi satnogs-client[427]: resp = self.send(prep, **send_kwargs)
Mar 03 12:52:59 raspberrypi satnogs-client[427]: File "/var/lib/satnogs/lib/python3.9/site-packages/requests/sessions.py", line 701, in send
Mar 03 12:52:59 raspberrypi satnogs-client[427]: r = adapter.send(request, **kwargs)
Mar 03 12:52:59 raspberrypi satnogs-client[427]: File "/var/lib/satnogs/lib/python3.9/site-packages/requests/adapters.py", line 578, in send
Mar 03 12:52:59 raspberrypi satnogs-client[427]: raise ReadTimeout(e, request=request)
Mar 03 12:52:59 raspberrypi satnogs-client[427]: requests.exceptions.ReadTimeout: HTTPSConnectionPool(host='network.satnogs.org', port=443): Read timed out. (read tim>
```
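
For context, the last frames of the traceback show the `requests.get` call in `get_jobs` giving up while waiting for a reply from `network.satnogs.org`. The sketch below only illustrates that kind of read timeout and is not the satnogs-client code. It assumes a local test server that accepts connections but never answers, uses the standard library's `urllib.request` instead of `requests`, and introduces a hypothetical helper, `poll_once`, that catches the error so the next poll can still run.

```python
import socket
import threading
import urllib.error
import urllib.request


def start_silent_server():
    """Listen on a free local port and accept connections, but never reply."""
    srv = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
    srv.bind(("127.0.0.1", 0))
    srv.listen(5)
    held = []  # keep accepted sockets open so the client keeps waiting

    def accept_loop():
        while True:
            conn, _ = srv.accept()
            held.append(conn)

    # Daemon thread: it ends when the main program exits.
    threading.Thread(target=accept_loop, daemon=True).start()
    return srv.getsockname()[1]


def poll_once(url, timeout):
    """Hypothetical stand-in for one polling attempt; returns a status string."""
    try:
        with urllib.request.urlopen(url, timeout=timeout) as resp:
            return "ok %d" % resp.status
    except (socket.timeout, urllib.error.URLError) as err:
        # The request failed or no response arrived within `timeout` seconds.
        # Report it and let the caller try again later.
        return "error: %s" % type(err).__name__


port = start_silent_server()
# Three polls in a row: each one times out on its own and the loop carries on.
results = [poll_once("http://127.0.0.1:%d/" % port, timeout=0.5) for _ in range(3)]
print(results)
```

Each attempt in this sketch fails independently, which would match a log where the same traceback repeats on every poll while the server is slow to respond and stops once replies arrive in time again.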