Currently auto-vetting isn’t available for CW signals, but automatic decoding is.

I concentrate my pass collection priorities on satellites that send CW telemetry, at least in some modes of operation.

I observe that most of the decodes are gibberish, yet when I go back to the actual audio the signal is strong. As a frequent contester I can copy fairly fast CW signals under very marginal conditions, and I have played with automatic decoding algorithms as well.

CW needs at least 6 dB of SNR to be copied comfortably by ear (marginal copy, with great effort, can go lower than that), but that figure relies on the ear-brain combination, which is known to add some advantage to the equation. Some claim this advantage is as high as 10 dB; from my experience with decoding algorithms I would be more conservative and say it’s circa 6 dB.

So automatic decoding algorithms should show a sharp threshold at an SNR of about 12 dB (the 6 dB needed by ear plus the 6 dB ear-brain advantage), copying above it and failing below it.
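To make the arithmetic explicit, here is a minimal sketch of that rule of thumb. The function names are made up for illustration, and the 6 dB and 10 dB figures are only the estimates quoted above, not measured values.

```python
def decoder_threshold_db(ear_threshold_db=6.0, ear_brain_advantage_db=6.0):
    """SNR a machine decoder would need under the rule of thumb above.

    Both defaults are the estimates from the post, not measurements.
    """
    return ear_threshold_db + ear_brain_advantage_db


def should_copy(snr_db, threshold_db=None):
    """True if the signal sits at or above the expected decoder threshold."""
    if threshold_db is None:
        threshold_db = decoder_threshold_db()
    return snr_db >= threshold_db


print(decoder_threshold_db())           # 12.0 with the conservative 6 dB advantage
print(decoder_threshold_db(6.0, 10.0))  # 16.0 if the 10 dB claim were right
print(should_copy(15.0), should_copy(9.0))
```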

Obviously SNR goes up as the bandwidth goes down, but there is a practical limit set by the DSP filters required for very narrow processing, especially FIR filters. For slow signals a 15-25 Hz filter should be enough, and most satellite telemetry is sent at 10-15 wpm or so to increase the likelihood of being copied by novice operators.
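As a rough sketch of the numbers involved: the dot length follows from the usual PARIS convention (50 dot units per word, so a dot lasts 1.2/wpm seconds), and with flat noise the SNR changes by 10·log10 of the bandwidth ratio. The 2500 Hz reference bandwidth below is my assumption (a typical SSB audio passband), not something taken from the SatNOGS flowgraph.

```python
import math


def dot_duration_s(wpm):
    """Dot length in seconds under the PARIS convention (50 units per word)."""
    return 1.2 / wpm


def keying_fundamental_hz(wpm):
    """Fundamental of a string of dots: one dot plus one equal gap per cycle."""
    return 1.0 / (2.0 * dot_duration_s(wpm))


def snr_in_bandwidth_db(snr_db, ref_bw_hz, new_bw_hz):
    """Rescale an SNR from ref_bw_hz to new_bw_hz.

    Assumes flat noise and a signal that fits entirely inside both bandwidths.
    """
    return snr_db + 10.0 * math.log10(ref_bw_hz / new_bw_hz)


for wpm in (10, 15):
    print(wpm, "wpm:",
          round(dot_duration_s(wpm) * 1000), "ms dot,",
          round(keying_fundamental_hz(wpm), 2), "Hz keying fundamental")

# A signal at 0 dB SNR in an assumed 2500 Hz passband, seen through 25 Hz:
print(round(snr_in_bandwidth_db(0.0, 2500.0, 25.0), 1), "dB")
```

Under these assumptions, narrowing from 2500 Hz to 25 Hz is worth 20 dB, which is why the filter stage matters so much for a slow telemetry beacon.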

The signals I’m hearing on the pass audio track are at moments quite solid, well above the 12 dB level (by trained ear), yet the decoder reports plenty of “E” and “T”. That is the fingerprint of a filtering problem, since noise usually fools the algorithms into decoding Es or Ts (perhaps also Is or Ms, which points to fast or slow adapting algorithms).
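To illustrate why noise shows up as E and T, here is a toy "length counting" decoder. It is a sketch for illustration only, not the SatNOGS decoder: it takes already detected key-down/key-up runs, assumes the dot length is known, and uses fixed thresholds at 2 and 5 dot units that I picked for the example.

```python
MORSE = {
    ".-": "A", "-...": "B", "-.-.": "C", "-..": "D", ".": "E", "..-.": "F",
    "--.": "G", "....": "H", "..": "I", ".---": "J", "-.-": "K", ".-..": "L",
    "--": "M", "-.": "N", "---": "O", ".--.": "P", "--.-": "Q", ".-.": "R",
    "...": "S", "-": "T", "..-": "U", "...-": "V", ".--": "W", "-..-": "X",
    "-.--": "Y", "--..": "Z",
}


def decode_runs(runs, dot_s):
    """Decode (is_mark, duration_s) runs using fixed length thresholds."""
    text, symbol = [], ""
    for is_mark, dur in runs:
        units = dur / dot_s
        if is_mark:
            # Short mark is a dot, long mark is a dash.
            symbol += "." if units < 2.0 else "-"
        elif units >= 2.0 and symbol:
            # A gap of two units or more closes the current character.
            text.append(MORSE.get(symbol, "?"))
            symbol = ""
            if units >= 5.0:
                text.append(" ")  # a long gap also ends the word
    if symbol:
        text.append(MORSE.get(symbol, "?"))
    return "".join(text).strip()


DOT = 0.1  # seconds, i.e. 12 wpm

# Clean keying of "CQ": -.-.  --.-
clean = [(True, .3), (False, .1), (True, .1), (False, .1), (True, .3),
         (False, .1), (True, .1), (False, .3),
         (True, .3), (False, .1), (True, .3), (False, .1), (True, .1),
         (False, .1), (True, .3)]
print(decode_runs(clean, DOT))   # CQ

# Isolated noise bursts that cross the detector threshold between long gaps:
noise = [(True, .08), (False, .9), (True, .25), (False, 1.1),
         (True, .12), (False, .6), (True, .31)]
print(decode_runs(noise, DOT))   # E T E T
```

Each isolated burst is a one-element character, so it can only come out as E (short) or T (long); two bursts close together would give I or M in the same way.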

Modern algorithms overcome this condition with so-called “Bayesian” or “adaptive” filters; some tests with Kalman filters have also been performed with success. Examples of actual implementations are, at the top of the food chain, CW-Skimmer (on top of which the global Reverse Beacon Network has been built, reporting thousands of spots per minute worldwide), and also CWGet and FLDigi. Pretty much the rest are either copycats of the above or old-generation “length counting” algorithms. The algorithms are not public, but the general principles are more or less known.
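As an example of the general principle only (this is not how CW-Skimmer or any of the programs above work, since their algorithms are not public), a one-dimensional Kalman filter can track the dot length instead of relying on a fixed value. The class name, the noise variances and the sample measurements are all invented for this sketch, and it assumes the measured marks have already been classified as dots.

```python
class ScalarKalman:
    """One-dimensional Kalman filter for a slowly drifting quantity."""

    def __init__(self, x0, p0, q, r):
        self.x = x0  # state estimate (dot length in ms)
        self.p = p0  # variance of the estimate
        self.q = q   # process noise variance added per step (sender drift)
        self.r = r   # measurement noise variance (timing jitter)

    def update(self, z):
        self.p += self.q                  # predict: random-walk model
        k = self.p / (self.p + self.r)    # Kalman gain
        self.x += k * (z - self.x)        # correct with the new measurement
        self.p *= (1.0 - k)               # shrink the uncertainty
        return self.x


# Start from a deliberately wrong guess (60 ms) with a large uncertainty,
# then feed measured dot lengths that jitter around 100 ms.
tracker = ScalarKalman(x0=60.0, p0=400.0, q=1.0, r=25.0)
measured_ms = [108, 94, 103, 97, 101, 99, 105, 96]
for z in measured_ms:
    estimate = tracker.update(z)
    print(round(estimate, 1))
```

The estimate jumps toward the first measurement because the initial uncertainty is large, then settles close to 100 ms while following later jitter less and less.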

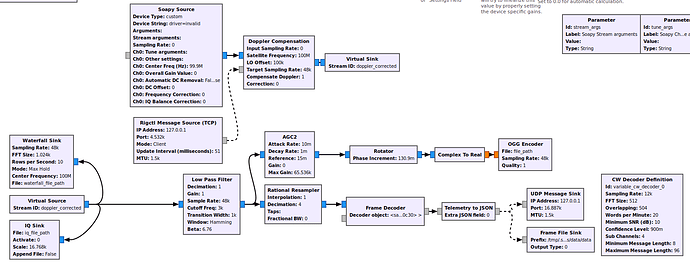

I’m interested in understanding the architecture of the SatNOGS decoder in general and the CW decoder in particular, and in ways to evaluate (even test on my own) some alternatives.

Is there any pointer to documentation that describes these aspects of the system? Or perhaps pointers to discussions and forums? Starting afresh on a topic in this system pretty much looks like trying to find a needle in a haystack.

73 de Pedro, LU7DID