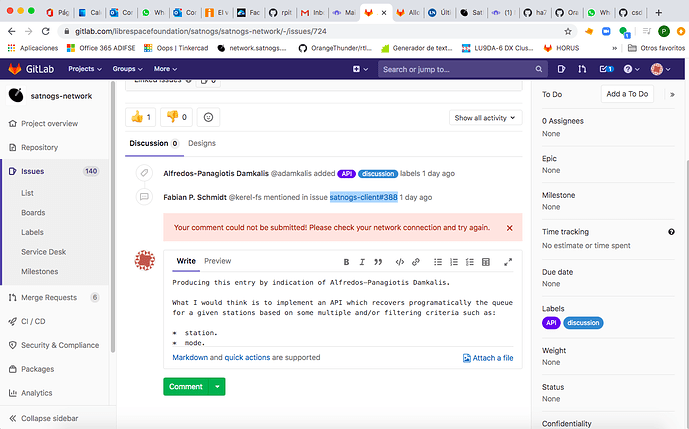

Thank you for your detailed response.

I believe you are all wrestling with fine-tuning the “centralized vs. decentralized” conundrum of how to manage this network.

I am neither requesting nor suggesting auto-vetting, since it would probably require considerable heuristics behind it to avoid type I or type II errors (false positives or false negatives).

What I am suggesting is putting tools in the hands of station owners that help automate the maintenance they can already do (with much greater effort), such as deleting observations, either actually deleting them or marking them as failed or unsuccessful, based on some criteria.

Since development always requires effort, and that effort might be better directed elsewhere, what I am proposing is an API that only owners could use, and only to do what owners can already do. With that tool, owners could do their maintenance chores, just more efficiently.

The “open all in tabs” trick is as old as the hills, but it helps too little. The estimated amount of manual interaction seems too optimistic: 3-5 minutes per batch of 50 observations is unrealistic; 10 minutes or more, driven by server response time and bandwidth limitations, seems more realistic. I currently have over 900 observations waiting for me to spend 3-4 hours of continuous pointing and clicking to classify them. Many of them are known failures. It is hard to find that much time.

With an API available, experienced developers like me could build tools to classify observations by known patterns, such as a pass with no audio recorded, certain satellites, or certain recurring situations (e.g. an eclipse pass of a satellite with dead batteries), or whatever else, and then share those tools if they prove useful or keep them to themselves if not.
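To illustrate the kind of tool meant here, the following is a minimal Python sketch of rule-based selection of known failures. It assumes a hypothetical observation record with `has_audio` and `in_eclipse` fields and hypothetical helpers (`no_audio`, `eclipse_with_dead_batteries`, `known_failures`); the NORAD IDs are arbitrary placeholders. It only selects candidates; acting on them would still need an owner-level API.

```python
from dataclasses import dataclass
from typing import Callable, Iterable

# Hypothetical observation record: field names are illustrative only,
# not the network's actual data model.
@dataclass(frozen=True)
class Observation:
    obs_id: int
    norad_id: int
    status: str        # e.g. "pending", "good", "bad", "failed"
    has_audio: bool    # whether any audio was recorded for the pass
    in_eclipse: bool   # whether the satellite was in eclipse during the pass

# A rule returns True when an observation matches a known failure pattern.
Rule = Callable[[Observation], bool]

def no_audio(obs: Observation) -> bool:
    """Nothing was recorded for the pass."""
    return not obs.has_audio

def eclipse_with_dead_batteries(dead_battery_sats: set) -> Rule:
    """Build a rule: satellites with dead batteries cannot transmit in eclipse."""
    def rule(obs: Observation) -> bool:
        return obs.norad_id in dead_battery_sats and obs.in_eclipse
    return rule

def known_failures(observations: Iterable[Observation], rules: list) -> list:
    """Return the IDs of still-pending observations matched by any rule."""
    return [
        obs.obs_id
        for obs in observations
        if obs.status == "pending" and any(rule(obs) for rule in rules)
    ]

# Usage with made-up data (NORAD IDs are placeholders).
obs = [
    Observation(1, 11111, "pending", has_audio=False, in_eclipse=False),
    Observation(2, 22222, "pending", has_audio=True, in_eclipse=True),
    Observation(3, 33333, "pending", has_audio=True, in_eclipse=True),
    Observation(4, 11111, "good", has_audio=False, in_eclipse=False),
]
rules = [no_audio, eclipse_with_dead_batteries({22222})]
print(known_failures(obs, rules))  # [1, 2]
```

Keeping each pattern as a separate small function makes it easy to share a rule that proves useful, or drop one that does not, without touching the rest of the script.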

There is no need to overthink the impact of things a determined owner can already do, just at the cost of much more time and effort.

The auto-scheduling API and tools are a good example: a clean implementation allows much better management of our own nodes than the ridiculous manual point-and-click with a two-day horizon that was used before.

The administrators have access to the database, and I have no doubt they can mass-process things, so perhaps it is time for them to consider loosening that grip and giving station owners that control over their own stations. Something along those lines is far (far) less effort to implement than any GUI or user-driven interface: it is just a wrapper over the database that presents a station owner's view of their own data, with some controlled interactions so as not to mess up anyone else's data.

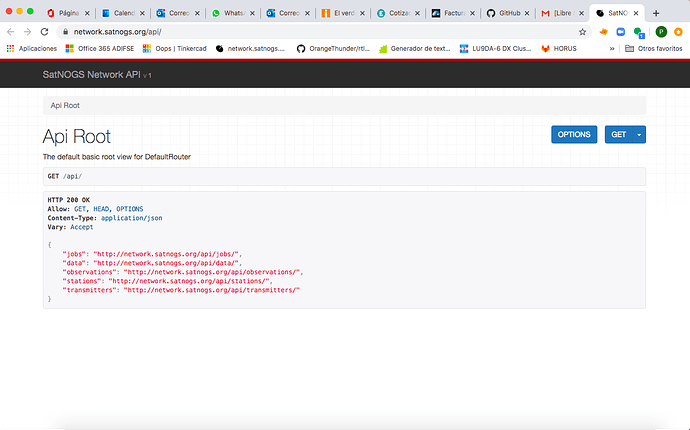

A concrete proposal: an API to list all past passes, filtered by satellite (NORAD ID), current status (planned, pending, vetted good, vetted bad, or even failed), or a combination of them, within a time window (expressed as from/to datetimes), and allowing them to be marked as FAILED.

This way there is zero risk of making a type I or type II error while vetting and leaving a wrongly vetted pass behind.

Simple scripting will do the rest.
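As an example of that scripting, here is a minimal Python sketch of the list-and-mark-as-FAILED workflow described in the proposal. The `StationAPI` interface, its `list_passes`/`mark_failed` methods, the `Pass` record and the status strings are all hypothetical, as is `InMemoryStation`, an offline stand-in for the real service; an actual implementation would also have to check on the server side that the caller owns the station.

```python
from dataclasses import dataclass
from datetime import datetime
from typing import Optional, Protocol

@dataclass
class Pass:
    obs_id: int
    norad_id: int
    status: str       # hypothetical values: "planned", "pending", "good", "bad", "failed"
    start: datetime

class StationAPI(Protocol):
    """Hypothetical owner-scoped API, shaped after the proposal in the post."""
    def list_passes(self, norad_id: Optional[int], status: Optional[str],
                    start: datetime, end: datetime) -> list: ...
    def mark_failed(self, obs_id: int) -> None: ...

class InMemoryStation:
    """Offline stand-in for one owner's station, used to try the script."""
    def __init__(self, passes):
        self.passes = {p.obs_id: p for p in passes}

    def list_passes(self, norad_id, status, start, end):
        # None means "do not filter on this field"; the window is half-open.
        return [p for p in self.passes.values()
                if (norad_id is None or p.norad_id == norad_id)
                and (status is None or p.status == status)
                and start <= p.start < end]

    def mark_failed(self, obs_id):
        self.passes[obs_id].status = "failed"

def fail_matching(api: StationAPI, norad_id: Optional[int], status: Optional[str],
                  start: datetime, end: datetime) -> list:
    """Mark every pass matching the filters as failed; return their IDs."""
    marked = []
    for p in api.list_passes(norad_id, status, start, end):
        api.mark_failed(p.obs_id)
        marked.append(p.obs_id)
    return marked

# Usage with made-up data (NORAD IDs are placeholders).
station = InMemoryStation([
    Pass(1, 11111, "pending", datetime(2024, 1, 1, 10)),
    Pass(2, 11111, "pending", datetime(2024, 1, 5, 10)),
    Pass(3, 22222, "pending", datetime(2024, 1, 1, 12)),
    Pass(4, 11111, "good", datetime(2024, 1, 1, 14)),
])
marked = fail_matching(station, norad_id=11111, status="pending",
                       start=datetime(2024, 1, 1), end=datetime(2024, 1, 2))
print(marked)  # [1]
```

The time window is treated as half-open (`start <= t < end`) so that consecutive windows never select the same pass twice, and the script only ever moves passes to FAILED, never to a vetted good or bad state.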

Thanks for your attention, Pedro LU7DID